If you have independent $X_1,\dots,X_n$ from an $N(\mu,1)$ distribution, you don’t have to think too hard to work out that $\bar X_n$, the sample mean, is the right estimator of $\mu$ (unless you have quite detailed prior knowledge). As people who have taken an advanced course in mathematical statistics will know, there is a famous estimator that appears to do better.

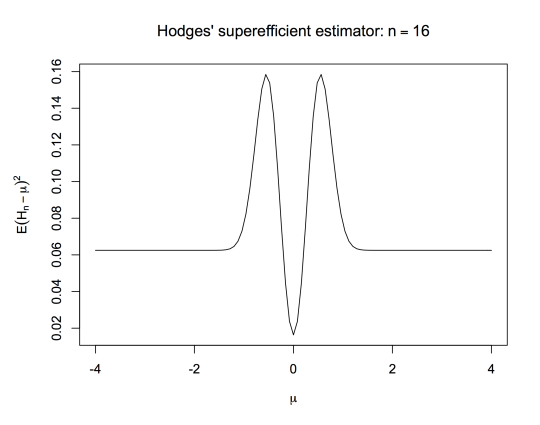

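Hodges’ estimator is given by $H_n=\bar X_n$ if $|\bar X_n|>n^{-1/4}$, and $H_n=0$ if $|\bar X_n|\le n^{-1/4}$. If $\mu\neq 0$, $H_n=\bar X_n$ for all large enough $n$ (almost surely), so $\sqrt{n}(H_n-\mu)\stackrel{d}{\to}N(0,1)$ just as for $\bar X_n$. On the other hand, if $\mu=0$, $H_n=0$ for all large enough $n$, so $\sqrt{n}(H_n-\mu)\to 0$. That is, $H_n$ is asymptotically better than $\bar X_n$ for $\mu=0$ and asymptotically as good for any other value of $\mu$. Of course there’s something wrong with it: it sucks for $\mu$ near, but not at, zero. Here’s its mean squared error: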
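A quick Monte Carlo check of that MSE picture, as a minimal sketch. It relies on the fact that $\bar X_n\sim N(\mu,1/n)$ exactly for $N(\mu,1)$ data, so it draws $\bar X_n$ directly; the function names, the choice $n=10{,}000$ and the replication count are arbitrary assumptions, not anything from the original analysis.

```python
import math
import random


def hodges(xbar: float, n: int) -> float:
    """Hodges' estimator: shrink the sample mean to 0 when |xbar| <= n**(-1/4)."""
    return 0.0 if abs(xbar) <= n ** -0.25 else xbar


def scaled_mse(mu: float, n: int, reps: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo estimate of n * E[(H_n - mu)^2] for N(mu, 1) data.

    The mean of n N(mu, 1) observations is exactly N(mu, 1/n), so we draw
    it directly instead of simulating all n observations.
    """
    rng = random.Random(seed)
    sd = 1 / math.sqrt(n)
    total = 0.0
    for _ in range(reps):
        xbar = rng.gauss(mu, sd)
        total += (hodges(xbar, n) - mu) ** 2
    return n * total / reps


if __name__ == "__main__":
    n = 10_000  # threshold n**(-1/4) = 0.1
    for mu in [0.0, 0.05, 0.1, 0.5]:
        print(f"mu={mu:<5} n*MSE ~ {scaled_mse(mu, n):.3f}")
```

For the sample mean, $n\cdot\mathrm{MSE}=1$ for every $\mu$. Here it should come out near 0 at $\mu=0$, near 1 at $\mu=0.5$, and far above 1 for $\mu$ around 0.05 to 0.1, where $\bar X_n$ is usually pulled to zero even though $\mu$ is many standard errors away from it.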

Even Wikipedia knows this much. What I recently got around to doing was extending this to an estimator that’s asymptotically superior to $\bar X_n$ on a dense set. This isn’t new – Le Cam did it in his PhD thesis. It may even be the same as Le Cam’s construction (which isn’t online, as far as I can tell). [Actually, Le Cam’s construction is a draft exercise in a draft chapter for David Pollard’s long-awaited ‘Asymptopia’. And it is basically my one, so it’s quite likely that as a Pollard fan I got at least the idea from there.]

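First, instead of just setting the estimate to zero when it’s close enough to zero, we can set it to the nearest integer when it’s close enough to an integer. Writing $[x]$ for the integer nearest to $x$, define $H_{n,0}=[\bar X_n]$ if $|\bar X_n-[\bar X_n]|\le n^{-1/4}$, with $H_{n,0}=\bar X_n$ otherwise.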
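A minimal Python sketch of the nearest-integer version, assuming $\bar X_n$ has already been computed. The function name is hypothetical; exact ties halfway between two integers are broken by Python’s round-half-to-even, a detail that has probability zero and doesn’t matter to the argument.

```python
def nearest_integer_hodges(xbar: float, n: int) -> float:
    """Pull xbar to the nearest integer if it is within n**(-1/4) of it."""
    nearest = round(xbar)  # nearest integer; exact .5 ties go to the even one
    if abs(xbar - nearest) <= n ** -0.25:
        return float(nearest)
    return xbar


# With n = 10_000 the threshold is n**(-1/4) = 0.1.
print(nearest_integer_hodges(2.96, 10_000))  # 3.0
print(nearest_integer_hodges(2.8, 10_000))   # 2.8
```

Setting the target to zero only, instead of the nearest integer, recovers the original Hodges’ estimator.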

If $n$ is large enough, we can also shrink to multiples of 1/2. For example, using the same threshold for closeness, if $n>256$ there is at most one multiple of 1/2 within $n^{-1/4}$ of $\bar X_n$. If $n>4096$ there is at most one multiple of 1/4 within that range.
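The general count is a one-line calculation, assuming the same $n^{-1/4}$ threshold throughout. A window of half-width $n^{-1/4}$ around $\bar X_n$ has length $2n^{-1/4}$, and it can hold two multiples of $2^{-k}$ only if that length is at least $2^{-k}$. So there is at most one candidate when

$$2n^{-1/4}<2^{-k}\iff n^{1/4}>2^{k+1}\iff n>2^{4(k+1)}=16^{k+1},$$

which gives $n>16$ for integers ($k=0$), $n>256$ for halves ($k=1$) and $n>4096$ for quarters ($k=2$).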

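Define $H_{n,k}=2^{-k}[2^k\bar X_n]$ if $|\bar X_n-2^{-k}[2^k\bar X_n]|\le n^{-1/4}$ and $H_{n,k}=\bar X_n$ otherwise. This is well-defined (there is at most one multiple of $2^{-k}$ within $n^{-1/4}$ of $\bar X_n$) if $n>16^{k+1}$. For any fixed $k$, $\sqrt{n}(H_{n,k}-\mu)\to 0$ if $\mu$ is a multiple of $2^{-k}$ and $\sqrt{n}(H_{n,k}-\mu)\stackrel{d}{\to}N(0,1)$ otherwise.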
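The same idea for a general level $k$, as a sketch under the $n^{-1/4}$ threshold assumed above. The name `dyadic_hodges` is hypothetical, and refusing to run when $n\le 16^{k+1}$ is just one way to respect the well-definedness condition.

```python
def dyadic_hodges(xbar: float, n: int, k: int) -> float:
    """H_{n,k}: pull xbar to the nearest multiple of 2**-k if within n**(-1/4).

    Only intended for n > 16**(k+1), where at most one multiple of 2**-k
    can be that close to xbar.
    """
    if n <= 16 ** (k + 1):
        raise ValueError("need n > 16**(k+1) for H_{n,k} to be well-defined")
    step = 2.0 ** -k
    nearest = round(xbar / step) * step  # nearest multiple of 2**-k
    if abs(xbar - nearest) <= n ** -0.25:
        return nearest
    return xbar


n = 10**6  # threshold n**(-1/4) is about 0.0316; n > 16**(k+1) allows k up to 3
print(dyadic_hodges(0.26, n, 2))  # 0.25: within 0.0316 of a multiple of 1/4
print(dyadic_hodges(0.30, n, 2))  # 0.3: nearest quarter is 0.05 away
print(dyadic_hodges(0.26, n, 0))  # 0.26: nearest integer is far away
```

Larger $k$ puts more targets on the line, but each one needs $n$ to grow by a factor of 16 before it is usable.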

The obvious thing to do now is to let $k$ increase slowly with $n$. This doesn’t work. Consider a value for $\mu$ whose binary expansion has infinitely many 1s, but with increasingly many zeroes between them. Whatever your rule for $k_n$, there will be values of this type that are close enough to multiples of $2^{-k_n}$ to get pulled to the wrong value infinitely often as $n$ increases. $H_{n,k_n}$ will be asymptotically superior to $\bar X_n$ on a dense set, but it will be asymptotically inferior on another dense set, violating the rules of the game.
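A sketch of one bad step, using exact fractions. Everything here is a hypothetical illustration: the rule $k_n$ is the fastest one allowed by $n>16^{k+1}$, $\mu=1/2+2^{-30}$ is a finite truncation of a ‘sparse ones’ value, and sampling noise is ignored (at this $n$ it is about $2^{-40}$, far smaller than the gaps involved).

```python
import math
from fractions import Fraction


def largest_valid_k(n: int) -> int:
    """Hypothetical rule: k_n = largest k with n > 16**(k+1) (-1 if none)."""
    k = -1
    while n > 16 ** (k + 2):
        k += 1
    return k


def noise_free_error(mu: Fraction, n: int, k: int) -> float:
    """sqrt(n) * (H_{n,k} - mu) in the idealised case xbar == mu."""
    step = Fraction(1, 2 ** k)
    nearest = round(mu / step) * step  # exact nearest multiple of 2**-k
    if abs(mu - nearest) <= n ** -0.25:
        return math.sqrt(n) * float(nearest - mu)
    return 0.0  # not pulled, so H_{n,k} = xbar = mu


mu = Fraction(1, 2) + Fraction(1, 2 ** 30)
n = 16 ** 20 + 1
k = largest_valid_k(n)
print(k, noise_free_error(mu, n, k))  # 19 -1024.0
```

At this $n$, $\mu$ sits $2^{-30}$ from $1/2$, well inside the $n^{-1/4}\approx 2^{-20}$ window, so it is pulled to $1/2$: an error of about 1024 standard errors. Once $k_n\ge 30$ this truncated $\mu$ is itself a target and the error vanishes, but a value with ever-sparser 1s keeps hitting the same trap along infinitely many $n$.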

What we can do is pick $k$ at random. The efficiency gain isn’t 100% as it was for fixed $k$, but it’s still there.

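Let $K_n$ be a random variable with probability mass function $p(k)$, $k=0,1,2,\dots$, drawn independently for each $n$ and independent of the $X$s. The distribution of $H_{n,K_n}$ conditional on $K_n=k$ is the distribution of $H_{n,k}$. If $p(k)>0$ for all $k$, the probability of seeing $K_n=k$ infinitely often is 1, so we can look at the limiting distribution of $\sqrt{n}(H_{n,K_n}-\mu)$ along subsequences with $K_n=k$. This limiting distribution is a point mass at zero if $2^k\mu$ is an integer, and $N(0,1)$ otherwise. So,

$$\sqrt{n}\,(H_{n,K_n}-\mu)\stackrel{d}{\to}\pi(\mu)\,\delta_0+\bigl(1-\pi(\mu)\bigr)\,N(0,1),$$

where

$$\pi(\mu)=\sum_{k:\,2^k\mu\in\mathbb{Z}}p(k).$$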
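A simulation sketch of the randomised estimator. The pmf $p(k)=2^{-(k+1)}$ is an assumed choice (any pmf with $p(k)>0$ for all $k$ fits the argument), and returning $\bar X_n$ unchanged when $n\le 16^{k+1}$ is a hypothetical convention for draws of $K_n$ that are too large for the current $n$.

```python
import math
import random


def dyadic_hodges(xbar: float, n: int, k: int) -> float:
    """H_{n,k}; returns xbar unchanged when n <= 16**(k+1) (hypothetical convention)."""
    if n <= 16 ** (k + 1):
        return xbar
    step = 2.0 ** -k
    nearest = round(xbar / step) * step  # nearest multiple of 2**-k
    return nearest if abs(xbar - nearest) <= n ** -0.25 else xbar


def draw_k(rng: random.Random) -> int:
    """Draw K with P(K = k) = 2**-(k+1), k = 0, 1, 2, ... (assumed pmf)."""
    k = 0
    while rng.random() < 0.5:
        k += 1
    return k


def scaled_errors(mu: float, n: int, reps: int = 20_000, seed: int = 2) -> list[float]:
    """Simulated draws of sqrt(n) * (H_{n,K_n} - mu), with a fresh K_n each time."""
    rng = random.Random(seed)
    root_n = math.sqrt(n)
    errors = []
    for _ in range(reps):
        xbar = rng.gauss(mu, 1 / root_n)  # xbar ~ N(mu, 1/n) exactly for N(mu, 1) data
        k = draw_k(rng)
        errors.append(root_n * (dyadic_hodges(xbar, n, k) - mu))
    return errors


errs = scaled_errors(0.5, 10**8)
share_at_zero = sum(e == 0.0 for e in errs) / len(errs)
mean_square = sum(e * e for e in errs) / len(errs)
print(f"P(error exactly 0) ~ {share_at_zero:.3f}, mean square ~ {mean_square:.3f}")
```

With $\mu=1/2$ the point-mass weight under this pmf is $\sum_{k\ge1}2^{-(k+1)}=1/2$, so the limiting variance is $1/2$. At $n=10^8$ only $k\le5$ are usable, so expect roughly $31/64$ of the errors to be exactly zero and a mean square near $33/64\approx0.52$.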

For a dense set of real numbers, namely the dyadic rationals $j/2^m$ (which include all numbers representable in binary floating point), $H_{n,K_n}$ has greater asymptotic efficiency than the efficient estimator $\bar X_n$. The disadvantage of this randomised construction is that working out the finite-sample MSE is just horrible to think about.
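Why every float qualifies, as a small sketch: a finite float is stored as an integer over a power of two, so $2^k x$ is an integer for every $k$ past some finite level $k_0$. The point-mass weight is then $\sum_{k\ge k_0}p(k)$, which is positive whenever $p(k)>0$ for all $k$. The helper names are hypothetical and the geometric pmf $p(k)=2^{-(k+1)}$ is again an assumed choice.

```python
from fractions import Fraction


def dyadic_level(x: float) -> int:
    """Smallest k >= 0 with 2**k * x an integer; finite for every finite float."""
    _, den = x.as_integer_ratio()  # lowest terms; den is a power of two for floats
    return den.bit_length() - 1


def point_mass_weight(x: float) -> Fraction:
    """P(2**K * x is an integer) when P(K = k) = 2**-(k+1) (assumed pmf).

    2**k * x is an integer exactly for k >= dyadic_level(x), so the weight is
    the sum over k >= k0 of 2**-(k+1), which equals 2**-k0.
    """
    return Fraction(1, 2 ** dyadic_level(x))


for x in [3.0, 0.5, 0.75, 0.1]:
    print(x, dyadic_level(x), point_mass_weight(x))
```

The gain can be tiny: the float `0.1` is stored as a multiple of $2^{-55}$, so under this pmf its point-mass weight, and hence its reduction in asymptotic variance, is only $2^{-55}$.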

The other interesting thing to think about is why the ‘overflow’ heuristic doesn’t work. Why doesn’t superefficiency for all fixed $k$ translate into superefficiency for sufficiently slowly increasing $k_n$? As a heuristic, this sort of thing has been around since the early days of analysis, but it’s more than that: the field of non-standard analysis is basically about making it rigorous.
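For reference, the rigorous principle is usually stated as follows, for the hypernaturals ${}^*\mathbb{N}$ of a non-standard model:

$$A\subseteq{}^*\mathbb{N}\ \text{internal},\quad \{0,1,2,\dots\}\subseteq A\ \Longrightarrow\ M\in A\ \text{for some infinite } M\in{}^*\mathbb{N}.$$

This is the precise form of passing from ‘true for every finite $k$’ to ‘true for some infinite $k$’. The word ‘internal’ carries the weight: the conclusion is only available for properties of the right, internally definable kind.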

My guess is that the distribution of $\sqrt{n}(H_{n,k}-\mu)$ for infinite $n$ is close to the superefficient distribution on the dense set only for ‘large enough’ infinite $k$, and close to $N(0,1)$ off the dense set only for ‘small enough’ infinite $k$. The failure of the heuristic is similar to the failure in Cauchy’s invalid proof that a convergent sequence of continuous functions has a continuous limit, the proof into which later analysis retconned the concepts of ‘uniform convergence’ and ‘equicontinuity’.